AI Hallucinations

(2025-08-12T03:41.237Z)

Perplexity Query

(2025-08-12T03:41

.237Z)

Question:

Write a comprehensive one-page article about "AI Hallucinations".

Response from sonar-pro:

How and why AI hallucinates

Introduction

AI hallucinations are errors where an AI system produces confident but incorrect, misleading, or fabricated content, such as nonexistent facts, sources, code, or images.

[46qmee]

They matter because generative AI is increasingly used in education, healthcare, business, and media, where plausibly written falsehoods can misinform users, harm reputations, and erode trust.

[634vgu]

At their core, hallucinations stem from how Generative AI models work: Large Language Models (LLMs) predict the next token from patterns in data rather than “knowing” ground truth, so they can fill gaps or smooth over uncertainty with fluent but false statements.

[bfu7vm]

This manifests across media types: text models may invent citations or events; image generators may add anatomical errors like extra fingers; and audio models may introduce artifacts when reconstructing waveforms from spectrograms.

[46qmee]

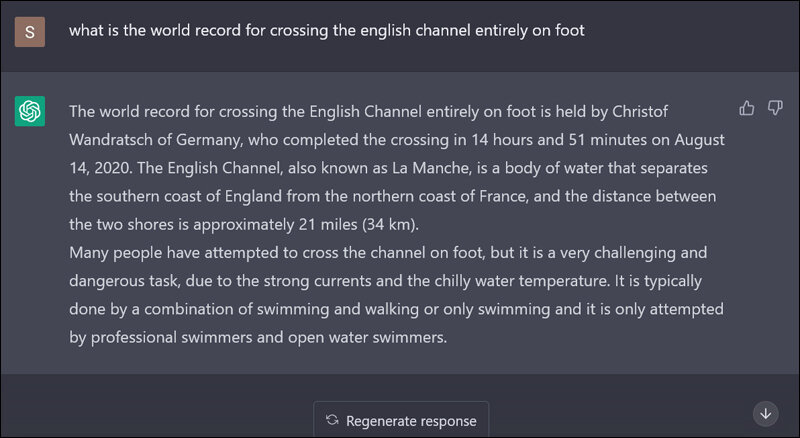

Practical examples illustrate the range and risk. Text chatbots have fabricated scholarly references that do not exist, presenting them as real, a common failure in academic contexts.

[46qmee]

Case studies include defamatory fabrications about individuals—mixing real details with made‑up crimes—illustrating how unverified generation can cause serious harm.

[3f95n2]

In audio, even strong models like Whisper can “hear” nonexistent phrases in noisy input, likely overgeneralizing when uncertain, which is dangerous in medical transcription.

[3f95n2]

Despite risks, generative AI offers benefits when paired with safeguards. Useful applications include drafting and summarization with verifiable sources, coding assistance with unit tests, and creative ideation where factual precision is less critical.

[634vgu]

In vision and audio, models enable rapid concept art, localization, and accessibility features like captioning—provided outputs are reviewed.

[46qmee]

Effective mitigation combines techniques: human‑in‑the‑loop review in high‑stakes tasks, constrained generation (schemas, retrieval‑augmented generation, tool use), domain‑specific data curation, toxicity and prompt filtering, and entity‑level fact validation against trusted knowledge bases before display.

[3f95n2]

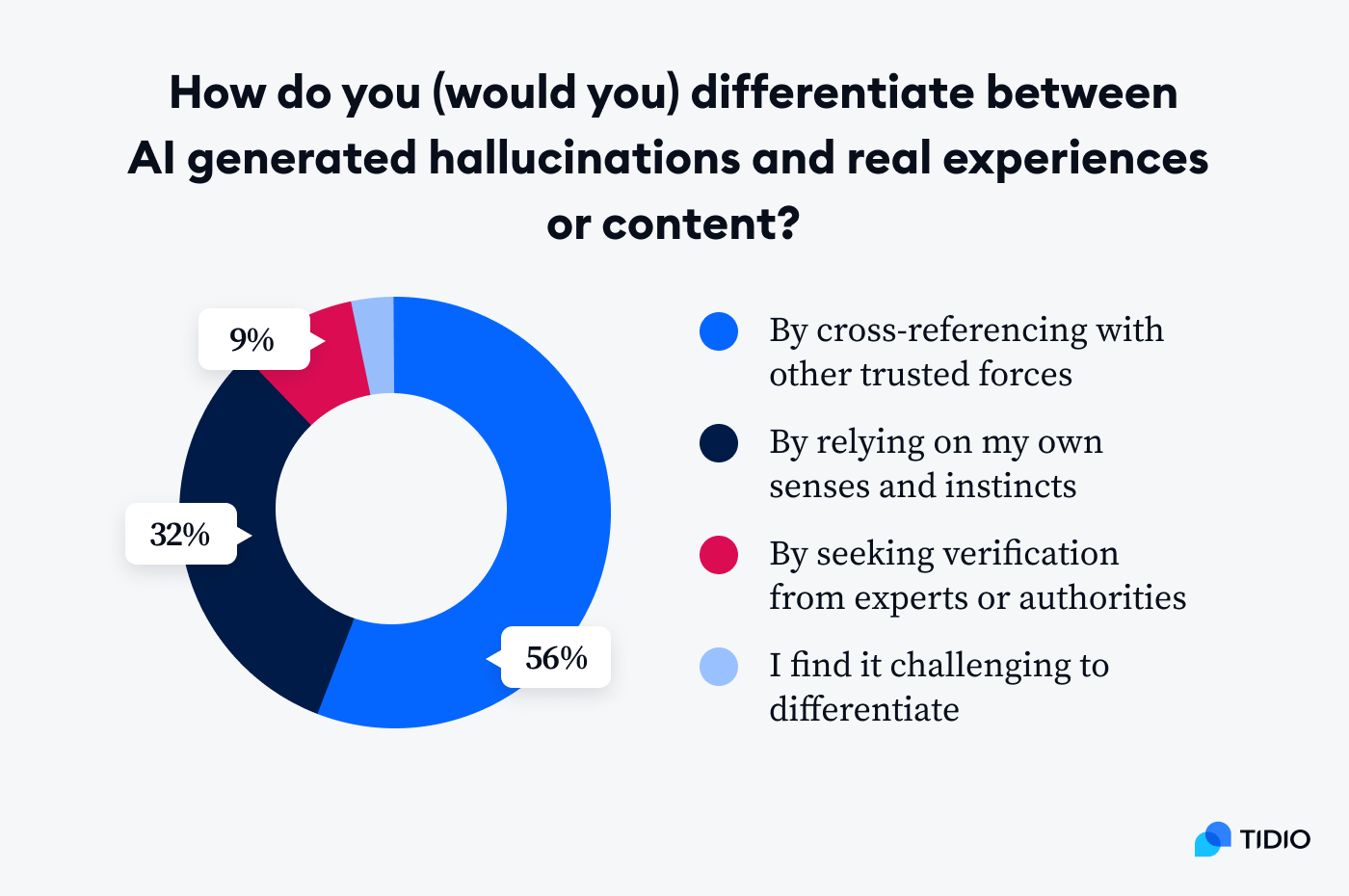

Educating users to verify citations and to ask models to show sources or admit uncertainty further reduces downstream errors.

[46qmee]

Challenges center on reliability, attribution, and safety. LLMs lack integrated truth verification and can oversimplify patterns, producing logical contradictions or plausible but false connections.

[bfu7vm]

Systems that learn from user inputs risk being steered into harmful outputs without robust guardrails, as seen in historical chatbot failures.

[3f95n2]

Organizations must balance speed with governance—tracking provenance, auditing prompts and outputs, and defining escalation paths for critical use cases.

[634vgu]

Current State and Trends

Adoption is widespread, but production deployments increasingly rely on Retrieval-Augmented Generation, Prompt Engineering, and structured output validation to curb hallucinations, especially in regulated domains like finance and health.

[634vgu]

Education and research communities emphasize teaching about hallucinations and how to detect fabricated citations, reflecting a shift toward “AI literacy.”

[46qmee]

Key players include providers of LLMs and tooling, along with platforms offering data curation, filtering, and evaluation for hallucination control; emerging best practices include entity verification, domain ontologies, and human review for high‑risk scenarios.

[3f95n2]

Recent developments highlight clearer taxonomies—factual, logical, fabricated citations, and creative hallucinations—and guidance that models do not inherently “know truth,” spurring investment in verification layers rather than solely larger models.

[bfu7vm]

Future Outlook

Expect tighter integration of retrieval, calculators, and APIs for grounded answers; stronger output validators that check claims and citations before display; and domain‑tuned models with curated data and guardrails, making hallucinations rarer in critical workflows while remaining a feature of open‑ended creative generation.

[bfu7vm]

These advances will likely shift AI from persuasive but fallible assistants toward systems that separate generation from verification, improving trust and accountability.

[3f95n2]

Conclusion

AI hallucinations are a predictable byproduct of pattern‑matching generators and must be managed with verification, constraints, and oversight.

[bfu7vm]

As tooling and practices mature, everyday use will become safer, unlocking value without sacrificing reliability.

[3f95n2]

Citations

[46qmee] 2025, Jun 15. LibGuides: Introduction to Generative AI: Hallucinations. Published: 2025-07-16 | Updated: 2025-06-16

[bfu7vm] 2025, Jul 23. What is AI Hallucination? Understanding and Mitigating AI .... Published: 2025-07-23 | Updated: 2025-07-24

[634vgu] 2025, Jul 28. What Is AI Hallucination and How to Avoid It: Everything You Need .... Published: 2025-07-13 | Updated: 2025-07-29

[3f95n2] 2025, Jul 14. Why Did My AI Lie? Understanding and Managing Hallucinations in .... Published: 2025-07-15 | Updated: 2025-07-15