Software Development with Code Generators

What is AI Even Good At?

Command Line Ninja Moves

HTML & CSS

Checking Web Pages for Anything

Filler

Boilerplate

Chores

- Renaming a hundred files

- Scanning a hundred files to look for a single line

- Reordering a hundred citations.

- Taking a document or outline and making a halfway decent presentation out of it.

- Rewriting a hundred bullet points so they do not look plagiarized.
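
The first two chores above can be sketched in a few lines of Python. This is a minimal sketch, not a definitive tool: the function names `find_line` and `rename_with_prefix`, the `*.txt` default pattern, and the prefix-based rename scheme are all assumptions made for illustration.

```python
from pathlib import Path


def find_line(root: Path, needle: str, pattern: str = "*.txt") -> list[tuple[Path, int]]:
    """Return (file, line_number) for every line under root that contains needle."""
    hits = []
    for path in sorted(root.rglob(pattern)):
        if not path.is_file():
            continue
        text = path.read_text(encoding="utf-8", errors="replace")
        for number, line in enumerate(text.splitlines(), start=1):
            if needle in line:
                hits.append((path, number))
    return hits


def rename_with_prefix(
    root: Path, prefix: str, pattern: str = "*.txt", dry_run: bool = True
) -> list[tuple[Path, Path]]:
    """Plan a rename that prepends prefix to each matching file name.

    With dry_run=True (the default) nothing on disk changes; the plan is only returned.
    """
    plan = []
    for path in sorted(root.glob(pattern)):
        if path.is_file() and not path.name.startswith(prefix):
            plan.append((path, path.with_name(prefix + path.name)))
    if not dry_run:
        for old, new in plan:
            old.rename(new)
    return plan
```

Defaulting to a dry run is a deliberate choice: reviewing the planned renames before applying them is cheap, and undoing a hundred wrong renames is not.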

Pleasant Surprises

Applying Market Standard Industry Conventions

Suggestions

Example Specification Suggestions

---

PM Review: Gaps & Suggested Additions

1. Platform Coverage is Incomplete

The blueprint focuses heavily on Twitter/X but misses:

- LinkedIn - Huge for B2B; uses OG tags but displays images differently (crops to different ratios)

- WhatsApp/iMessage - Dominant for "share" buttons on mobile; WhatsApp caches aggressively and is hard to debug

- Slack/Discord - Professional contexts; have their own preview quirks

Suggested addition: A "Platform-Specific Considerations" section.

2. No Character Limits Mentioned

Platforms truncate differently:

- og:title: ~60-70 chars before truncation

- og:description: ~160-200 chars

- Twitter title: 70 chars

Suggested addition: Add truncation guidance or a helper that enforces limits.
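
A helper that enforces limits might look like the sketch below. The numeric limits are assumptions taken from the low end of the approximate ranges listed above, `twitter:title` is assumed to be the tag meant by "Twitter title", and the names `LIMITS`, `truncate`, and `enforce_limits` are hypothetical.

```python
# Assumed limits, taken from the low end of the approximate ranges above.
LIMITS = {
    "og:title": 60,
    "og:description": 160,
    "twitter:title": 70,
}


def truncate(text: str, limit: int, ellipsis: str = "…") -> str:
    """Shorten text to at most `limit` characters, cutting at a space when possible."""
    text = " ".join(text.split())  # collapse runs of whitespace
    if len(text) <= limit:
        return text
    cut = text[: limit - len(ellipsis)]
    # Break at the last space so the result does not end mid-word.
    if " " in cut:
        cut = cut.rsplit(" ", 1)[0]
    return cut.rstrip(" ,;:.-") + ellipsis


def enforce_limits(tags: dict[str, str]) -> dict[str, str]:
    """Truncate only the tags that have a known limit; pass the rest through unchanged."""
    return {
        name: truncate(value, LIMITS[name]) if name in LIMITS else value
        for name, value in tags.items()
    }
```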

3. Missing Debugging/Validation URLs

The doc says "use social validators" but doesn't list them:

- Facebook: https://developers.facebook.com/tools/debug/

- LinkedIn: https://www.linkedin.com/post-inspector/

- Twitter: https://cards-dev.twitter.com/validator

Suggested addition: Concrete validator URLs in the Validation section.

4. Image Fallback Chain Not Defined

What's the cascade when shareImage is missing?

- Page-specific image → Collection default → Site default?

- Should certain content types (articles vs. profiles) have different defaults?
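
One possible answer to the cascade question, assuming the order page-specific image, then collection default, then site default. Only `shareImage` comes from the blueprint; the image paths, the collection names, and the function name `resolve_share_image` are hypothetical.

```python
from typing import Optional

# Hypothetical defaults; real paths would come from the site's configuration.
SITE_DEFAULT = "/images/share-default.png"
COLLECTION_DEFAULTS = {
    "articles": "/images/share-articles.png",
    "profiles": "/images/share-profiles.png",
}


def resolve_share_image(share_image: Optional[str], collection: Optional[str] = None) -> str:
    """Pick the share image: page-specific, then collection default, then site default."""
    if share_image:
        return share_image
    if collection in COLLECTION_DEFAULTS:
        return COLLECTION_DEFAULTS[collection]
    return SITE_DEFAULT
```

Keeping the per-collection defaults in one mapping also answers the second question: articles and profiles get different defaults by adding entries, without touching the lookup logic.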

5. Article-Specific Properties Missing

For blog/news content, these matter:

- article:published_time

- article:modified_time

- article:author

- article:section
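
As a rough illustration, the four properties above could be rendered from a page's metadata like this. The function name `article_meta_tags` and its parameters are hypothetical, and the sketch assumes timestamps are emitted in ISO 8601 form.

```python
from datetime import datetime, timezone
from html import escape
from typing import Optional


def article_meta_tags(
    published: datetime,
    modified: Optional[datetime] = None,
    author: Optional[str] = None,
    section: Optional[str] = None,
) -> list[str]:
    """Render article:* meta tags, skipping any property that has no value."""
    values = {
        "article:published_time": published.isoformat(),
        "article:modified_time": modified.isoformat() if modified else None,
        "article:author": author,
        "article:section": section,
    }
    return [
        f'<meta property="{name}" content="{escape(value, quote=True)}">'
        for name, value in values.items()
        if value
    ]


if __name__ == "__main__":
    when = datetime(2025, 1, 2, 3, 4, 5, tzinfo=timezone.utc)
    print("\n".join(article_meta_tags(when, section="Engineering")))
```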

6. No Mention of JSON-LD/Structured Data

OG tags and Schema.org/JSON-LD serve different purposes but are complementary. Worth noting the relationship.
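
To make the relationship concrete: the same page metadata that feeds the OG tags can also feed a JSON-LD block. A minimal sketch, assuming a Schema.org `Article` with only `headline`, `datePublished`, and `author`; the function name `json_ld_article` and its parameters are hypothetical.

```python
import json


def json_ld_article(headline: str, date_published: str, author_name: str) -> str:
    """Build a <script type="application/ld+json"> block describing an Article."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "datePublished": date_published,
        "author": {"@type": "Person", "name": author_name},
    }
    # Escape "<" so a value such as "</script>" cannot close the tag early.
    payload = json.dumps(data, ensure_ascii=False).replace("<", "\\u003c")
    return f'<script type="application/ld+json">{payload}</script>'
```

OG tags drive the link preview shown when a page is shared; the JSON-LD block describes the same page to search engines, so generating both from one record keeps them from drifting apart.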

7. og:locale for Multi-Language

The doc mentions i18n but doesn't show og:locale or og:locale:alternate tags.

Design System Conventions

Diagrams as Code

Scanning Large Codebases

Interpreting Error Messages

Giving Beginners Courage

Rapid Documentation

Robust Git Commits

Robust Code Comments

UI & Design Variants

Responsive Front-End

Appropriate Animations

Foreign Frameworks and Libraries (that are in Wide Use)

Picking Up Where You Left Off

Being Cooperative

Boosting Confidence

Iteration Stamina

Rupture and Repair

Markdown & JSON & CSV

Scanning and Reading Documentation

What is AI Bad At?

Completing a complex task you gave it

Being honest

Limitations of AI Code Assistants and Code Generator AI

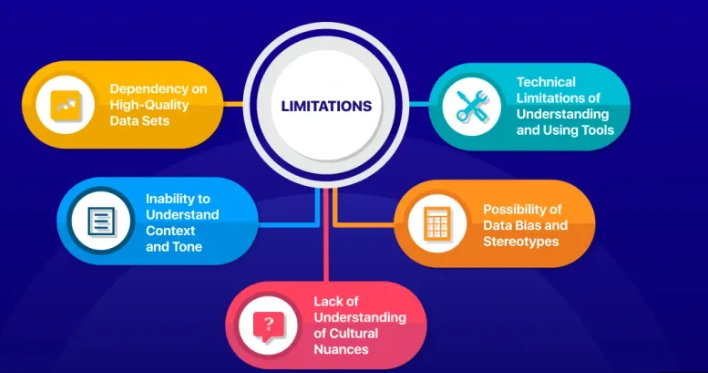

Key Limitations of AI Code Assistants

A diagram depicting key limitation categories: Context, Creativity, Complexity, Security, Collaboration, and Compliance.

- Handling Edge Cases: Rare scenarios, multi-step error handling, and intricate algorithms often stump AI, resulting in incorrect or suboptimal code that requires manual correction. [9f363s]

- Collaboration Breakdown: AI-generated code discourages peer learning and team discussion, sometimes causing confusion about intent or logic. [ucph8r]

- Increased Dependency Risks: Overreliance can erode skills and discourage developers from deeply engaging with the codebase. [ucph8r]

- Security and Compliance: AI-generated code has been shown to leak secrets, bypass reviews, and increase critical vulnerabilities, with additional risks when handling sensitive data and compliance mandates. [vk4uhi]

Why Some Engineers Are Giving Up on Vibe Coding

A split image: professional engineers reviewing an AI-generated pull request filled with questionable code, side-by-side with increased security flags and reviewer comments.

- Review Overload: Apiiro's 2024 research found pull requests with AI code required 60% more review comments, especially on security issues, creating review fatigue and slowing delivery. [vk4uhi]

- Higher Vulnerability Rates: Projects using AI assistants saw a 2.5x increase in critical vulnerabilities, faster code merges (often bypassing human checks), and a 40% jump in secrets exposure. [vk4uhi]

- Productivity Paradox: Contrary to claims, a 2025 METR study found that experienced developers took about 19% longer to finish issues when using AI tools; the time lost to fixing, checking, or refactoring AI-generated code often outweighs purported efficiency gains. [7rrgpy]

Ongoing Responses and Mitigation Strategies

A flowchart showing improved human-in-the-loop code workflows, robust context feeding, integrated security scan, and manual code review checkpoints.

- Tool Improvements: Vendors are building better feedback loops, transparency features (allowing the AI to indicate confidence or request confirmation), and improved static analysis integration to catch hallucinations and vulnerabilities earlier. [17262x]

- Security-First Defaults: Organizations are closing AI integration gaps by masking secrets, enforcing compliance, and running security scanners on all AI-generated code before deployment. [vk4uhi]
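
Masking secrets can be as simple as a filter that runs before code or logs are handed to an AI tool. The sketch below is illustrative only: the two patterns (an AWS-style access key ID and a generic `key = value` assignment) are assumptions chosen for the example, the name `mask_secrets` is hypothetical, and none of this replaces a dedicated secret scanner.

```python
import re

# Illustrative patterns only; a real scanner covers far more formats.
AWS_KEY_ID = re.compile(r"\bAKIA[0-9A-Z]{16}\b")
ASSIGNMENT = re.compile(
    r"(?i)\b((?:api[_-]?key|secret|token|password)\s*[:=]\s*)(['\"]?)[^'\"\s]+\2"
)


def mask_secrets(text: str, mask: str = "***REDACTED***") -> str:
    """Replace likely secret values with a mask, keeping names and quotes intact."""
    text = AWS_KEY_ID.sub(mask, text)
    return ASSIGNMENT.sub(lambda m: f"{m.group(1)}{m.group(2)}{mask}{m.group(2)}", text)


if __name__ == "__main__":
    print(mask_secrets('API_KEY = "abc123"\npassword: hunter2'))
```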

Citations

[ucph8r] 2025, Oct 12. 6 limitations of AI code assistants and why developers should be .... Published: 2025-02-19 | Updated: 2025-10-12

[9f363s] 2025, Oct 09. Limitations of AI Coding Assistants: What You Need to Know. Published: 2025-09-22 | Updated: 2025-10-09

[17262x] 2025, Oct 12. Can AI really code? Study maps the roadblocks to ... - MIT News. Published: 2025-07-16 | Updated: 2025-10-12

[8hy165] 2025, Oct 12. Why Your AI Coding Assistant Keeps Doing It Wrong, and How To .... Published: 2025-05-22 | Updated: 2025-10-12

[vk4uhi] 2025, Oct 12. The Productivity Paradox of AI Coding Assistants | Cerbos. Published: 2025-09-12 | Updated: 2025-10-12

[7rrgpy] 2025, Oct 12. Measuring the Impact of Early-2025 AI on Experienced ... - METR. Published: 2025-07-10 | Updated: 2025-10-12

[a7gd38] 2025, Oct 11. The Essential Guide to AI Coding: What Actually Works in 2025. Published: 2025-04-10 | Updated: 2025-10-11