Vector Embeddings

(2025-09-23T11:18.697Z)

Perplexity Query

Question:

Write a comprehensive one-page article about "Vector Embeddings".

Model: sonar-pro

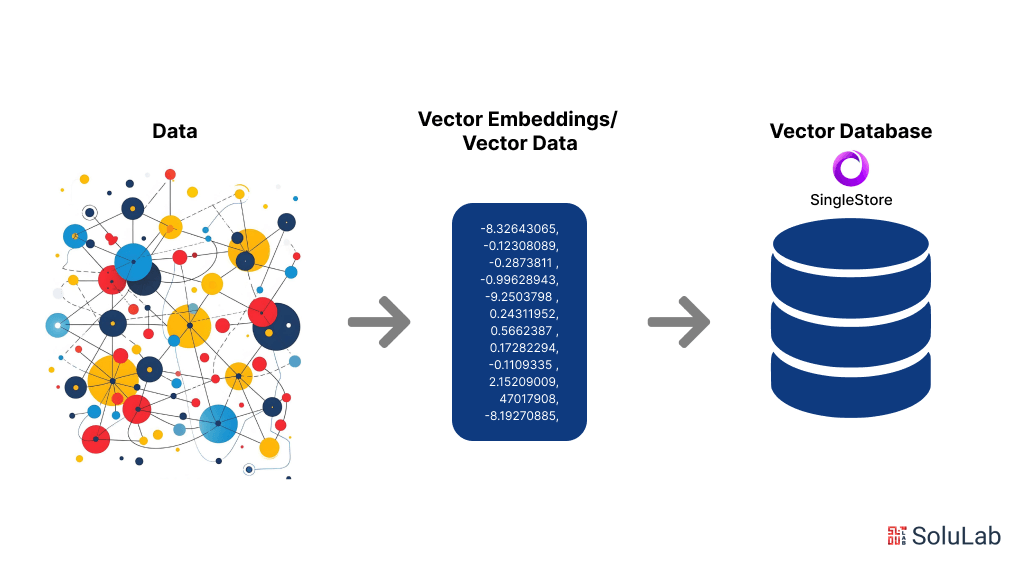

Vector embeddings are numerical representations of complex data such as words, images, or user behaviors. The data is converted into vectors (arrays of numbers) that machine learning models can interpret and process efficiently. [5rpwv2] [8pdi30] This technique is fundamental to modern artificial intelligence (AI) and machine learning, powering everything from search engines and voice assistants to recommendation systems.

What Are Vector Embeddings?

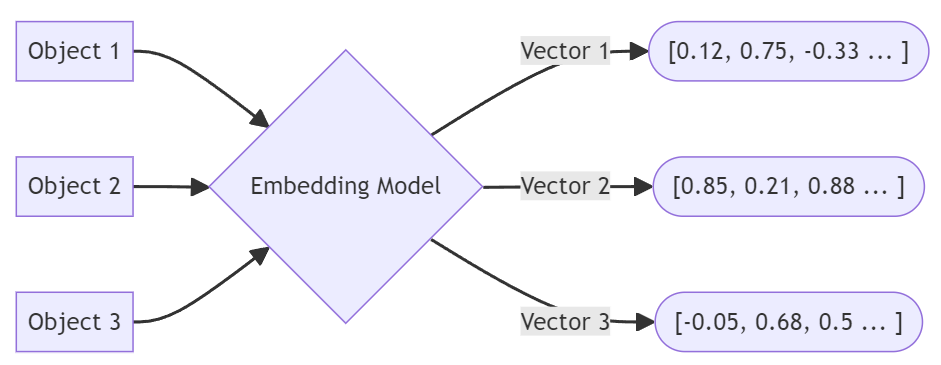

At their core, vector embeddings transform unstructured or abstract data into a high-dimensional numerical format that captures the essential characteristics and underlying meaning of that data. [5rpwv2] [hua7xe] For example, instead of treating the word "cat" solely as text, an embedding encodes its semantic meaning: "cat" and "dog" will have nearby vectors due to their conceptual similarity, while "cat" and "car" will be distant even though the two words differ by a single letter. [8pdi30] [hua7xe]

Embeddings are produced by training machine learning models, often neural networks, on large datasets. The models learn to identify meaningful patterns and relationships within the data, distilling these relationships into the resulting embeddings. [8pdi30] [s6e72t] As a result, similarities that are otherwise difficult to quantify, such as emotional tone in text or visual resemblance in images, become mathematically calculable.
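
To make "nearby" and "distant" concrete, the sketch below computes cosine similarity, one common way to compare embeddings. The 4-dimensional vectors are hand-picked, hypothetical values chosen only for illustration; real embeddings come from a trained model and typically have hundreds or thousands of dimensions.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


# Hypothetical toy embeddings (assumption: not produced by any real model).
toy_embeddings = {
    "cat": [0.9, 0.8, 0.1, 0.0],
    "dog": [0.8, 0.9, 0.2, 0.0],
    "car": [0.1, 0.0, 0.9, 0.8],
}

cat_dog = cosine_similarity(toy_embeddings["cat"], toy_embeddings["dog"])
cat_car = cosine_similarity(toy_embeddings["cat"], toy_embeddings["car"])

print(f"cat vs dog: {cat_dog:.3f}")
print(f"cat vs car: {cat_car:.3f}")
```

With these toy values, the "cat"/"dog" score is close to 1 while the "cat"/"car" score is much lower, which mirrors the intuition described above.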

Practical Examples and Use Cases

Vector embeddings are used extensively across industries:

- Natural Language Processing: Word embeddings (e.g., Word2Vec, GloVe) allow sentiment analysis, topic classification, and language translation by representing words and sentences as vectors. [8pdi30]

- Clustering and Anomaly Detection: Vector representations make it easier to group similar items or identify outliers by measuring mathematical distance between vectors. [s6e72t]
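
As a rough illustration of the distance-based outlier idea in the list above, the following sketch flags vectors that lie unusually far from the centroid of a set. The threshold rule (a multiple of the median distance), the `find_outliers` name, and the 2-dimensional sample points are all assumptions made for this example, not a method taken from the cited sources.

```python
import math
import statistics


def centroid(vectors):
    """Component-wise mean of a list of equal-length vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def euclidean(a, b):
    """Euclidean (straight-line) distance between two vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def find_outliers(vectors, factor=2.0):
    """Return indices of vectors farther than factor * median distance from the centroid.

    The median-based threshold is an illustrative heuristic, not a standard.
    """
    center = centroid(vectors)
    distances = [euclidean(v, center) for v in vectors]
    threshold = factor * statistics.median(distances)
    return [i for i, d in enumerate(distances) if d > threshold]


# Hypothetical 2-D "embeddings": four clustered points and one far away.
points = [
    [1.0, 1.0],
    [1.1, 0.9],
    [0.9, 1.2],
    [1.0, 1.1],
    [5.0, 5.0],
]

outliers = find_outliers(points)
print(outliers)
```

Here only the last point (index 4) exceeds the threshold. Production systems generally rely on more robust clustering or density-based methods, but the underlying operation is the same: measuring distance between vectors.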

Benefits and Potential Applications

The key advantage of vector embeddings lies in their ability to map complex, high-dimensional data into a consistent numerical form, enabling:

- Semantic similarity measurement: Quantifies how similar two pieces of data are, mirroring human intuition. [s6e72t]

- Interoperability: Allows integration of disparate data types (text, images, user logs) into unified models. [5rpwv2]

This versatility has driven widespread adoption in fields like search, natural language understanding, e-commerce, and healthcare.

Challenges and Considerations

Despite their promise, vector embeddings come with challenges:

- Interpretability: The high-dimensional, abstract nature of embeddings makes them difficult for humans to interpret directly.

- Bias and ethical concerns: Models can inherit biases present in training data, leading to fairness and trust issues. [s6e72t]

- Computational resources: Training and using embeddings at scale requires significant computing power and storage.
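
The storage cost in the last point can be estimated with simple arithmetic: number of vectors times dimensions times bytes per value. The figures below (one million vectors, 768 dimensions) are assumed purely for illustration; 4 bytes per value corresponds to 32-bit floats, and the estimate ignores index structures and metadata.

```python
def embedding_storage_bytes(num_vectors, dimensions, bytes_per_value=4):
    """Raw size of the vectors alone, excluding any index or metadata overhead."""
    return num_vectors * dimensions * bytes_per_value


# Assumed workload: 1,000,000 vectors with 768 dimensions each.
raw_float32 = embedding_storage_bytes(1_000_000, 768)                      # 32-bit floats
raw_float16 = embedding_storage_bytes(1_000_000, 768, bytes_per_value=2)   # 16-bit floats

print(f"float32: {raw_float32 / 1e9:.2f} GB")
print(f"float16: {raw_float16 / 1e9:.2f} GB")
```

Under these assumptions the raw vectors take roughly 3.07 GB at 32-bit precision and half that at 16-bit precision, which is why reduced precision is a common way to cut storage.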

Current State and Trends

Adoption of vector embedding technology is accelerating, driven by the growth of AI applications and the rise of specialized vector databases. [hua7xe] [s6e72t] Key players include major cloud providers and AI platforms (e.g., Google, IBM, AWS), as well as vector search and storage solutions such as Pinecone, Weaviate, and Elasticsearch. [5rpwv2] [hua7xe] [s6e72t]

Recent trends include:

- Expansion of embedding types: Beyond words and images, embeddings are now used for users, products, behaviors, and even multimodal data, improving personalization and enabling new kinds of AI assistants. [8pdi30]

- Vector search at scale: Organizations are rapidly adopting vector databases to support flexible, real-time search and retrieval across massive datasets. [hua7xe]

- Integration with large language models (LLMs): Embeddings are essential for storing contextual knowledge and powering question-answering systems, chatbots, and AI copilots.
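
The retrieval step behind vector search and LLM question answering can be sketched as a nearest-neighbor lookup: embed the query, score it against stored vectors, and return the closest matches. The example below is a minimal brute-force version over an in-memory dictionary. The document IDs, the 3-dimensional vectors, and the `top_k` helper are hypothetical, and the sketch does not reflect how any particular vector database is implemented (such systems typically use approximate indexes to scale).

```python
import heapq
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query, index, k=2):
    """Return the k (doc_id, score) pairs most similar to the query, best first."""
    scored = ((cosine_similarity(query, vec), doc_id) for doc_id, vec in index.items())
    best = heapq.nlargest(k, scored)
    return [(doc_id, score) for score, doc_id in best]


# Hypothetical stored embeddings keyed by document ID.
index = {
    "doc_pets": [0.9, 0.1, 0.0],
    "doc_vehicles": [0.0, 0.2, 0.9],
    "doc_pet_food": [0.7, 0.6, 0.1],
}

# Hypothetical embedding of a user query about pets.
query_vector = [0.8, 0.3, 0.0]

results = top_k(query_vector, index, k=2)
for doc_id, score in results:
    print(f"{doc_id}: {score:.3f}")
```

In a question-answering setup, the text of the top-ranked documents would then be passed to the language model as context. Brute-force scoring is exact but scales linearly with the number of stored vectors.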

Future Outlook

Vector embeddings are poised to become even more critical as AI systems evolve toward deeper understanding of, and interaction with, human language, images, and multimodal data. We can expect continued advances in self-supervised learning, interpretability, and real-time vector analysis, with effects on industries ranging from healthcare and law to entertainment and education.

Conclusion

Vector embeddings are revolutionizing how machines understand and utilize abstract data, enabling smarter, more intuitive applications. As technology advances, embedding techniques will drive the next wave of intelligent, responsive AI systems that adapt seamlessly to user needs.

Citations

[5rpwv2] 2025, Sep 23. What is Vector Embedding? | IBM. Published: 2024-06-12 | Updated: 2025-09-23

[8pdi30] 2025, Sep 21. What are Vector Embeddings? - Elastic. Published: 2023-08-07 | Updated: 2025-09-21

[hua7xe] 2025, Sep 23. Vector Embeddings Explained - Weaviate. Published: 2023-01-16 | Updated: 2025-09-23

[s6e72t] 2025, Sep 23. What are Vector Embeddings | Pinecone. Published: 2025-07-15 | Updated: 2025-09-23

[5] 2025, Sep 21. Embedding (machine learning) - Wikipedia. Published: 2025-02-28 | Updated: 2025-09-21

[6] 2025, Sep 23. A Beginner's Guide to Vector Embeddings | TigerData. Published: 2024-10-16 | Updated: 2025-09-23

[7] 2025, Aug 29. What are embeddings in machine learning? - Cloudflare. Published: 2025-01-01 | Updated: 2025-08-29

[9] 2025, Sep 23. Meet AI's multitool: Vector embeddings | Google Cloud Blog. Published: 2022-03-23 | Updated: 2025-09-23