Multimodal Models

(2025-11-26T14:23.804Z)

Perplexity Query


Question:

Write a comprehensive one-page article about "Multi-Modal Models".

Model: sonar-pro

Multimodal Models

Multimodal models are a type of artificial intelligence that can process and analyze multiple types of data, such as text, images, audio, and video, together. Traditional unimodal AI systems handle only a single data type. Multimodal models instead integrate information from several sources, which gives them a more complete picture of the input and makes their outputs more robust. This matters because many real-world problems can only be solved accurately by combining different forms of data.

Main Content

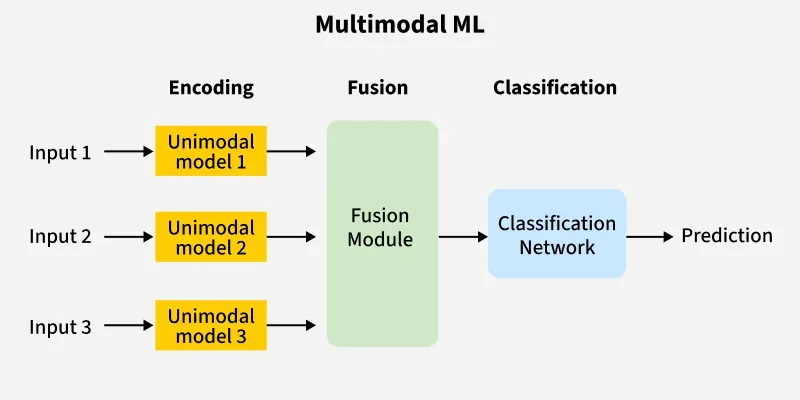

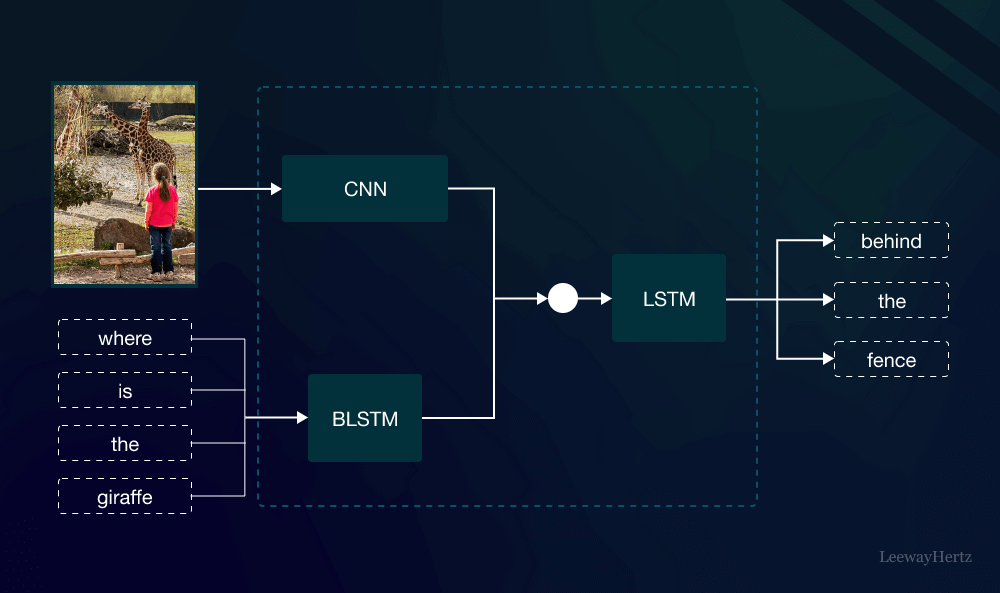

Multimodal models are built with deep learning techniques and are designed to handle several data modalities at once. Each modality (text, images, audio, and so on) is typically processed by its own specialized neural network, or encoder, and the results are combined through a process called fusion. Fusion can happen at different stages. Early fusion combines raw inputs or low-level features before modeling. Mid (intermediate) fusion merges the learned feature representations of each modality. Late fusion combines the final outputs of separate per-modality models. By drawing on the strengths of each modality, these models can make more accurate predictions and generate richer outputs.
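The following is a minimal Python sketch of early, mid (intermediate), and late fusion. It uses plain lists in place of real neural networks. It assumes each modality has already been reduced to a small numeric feature vector. Trained encoders and classifiers are replaced with hypothetical hand-picked linear weights; `dot`, `project`, and all weight values are illustrative and not part of any library. The element-wise sum in `intermediate_fusion` is only one simple way to merge representations. Real systems often concatenate representations or use cross-attention instead.

```python
# Minimal sketch of early, intermediate, and late fusion for two modalities.
# Assumption: each modality is already a small feature vector; the weights
# used below are hypothetical stand-ins for trained model parameters.
from typing import List

Vector = List[float]
Matrix = List[Vector]


def dot(w: Vector, x: Vector) -> float:
    """Weighted sum: a linear 'model' with no bias term."""
    return sum(wi * xi for wi, xi in zip(w, x))


def project(m: Matrix, x: Vector) -> Vector:
    """Linear encoder: one output feature per row of the matrix."""
    return [dot(row, x) for row in m]


def early_fusion(text: Vector, image: Vector, joint_w: Vector) -> float:
    # Concatenate the inputs first, then run a single joint model.
    return dot(joint_w, text + image)


def intermediate_fusion(text: Vector, image: Vector,
                        text_enc: Matrix, image_enc: Matrix,
                        head: Vector) -> float:
    # Encode each modality separately into same-sized representations,
    # merge them (element-wise sum here), then apply a shared output head.
    merged = [a + b for a, b in zip(project(text_enc, text),
                                    project(image_enc, image))]
    return dot(head, merged)


def late_fusion(text_score: float, image_score: float,
                alpha: float = 0.5) -> float:
    # Each modality has its own model; only the final scores are combined.
    return alpha * text_score + (1.0 - alpha) * image_score


if __name__ == "__main__":
    text, image = [1.0, 0.0], [0.5, 0.5, 1.0]
    print(early_fusion(text, image, [0.5, 0.25, 0.5, 0.5, 0.25]))  # 1.25
    print(intermediate_fusion(text, image,
                              [[1.0, 0.0], [0.0, 1.0]],
                              [[1.0, 0.0, 0.0], [0.0, 1.0, 1.0]],
                              [0.5, 0.5]))  # 1.5
    print(late_fusion(0.75, 0.25))  # 0.5
```

The key difference is where the modalities meet. Early fusion sees every input feature in one model. Intermediate fusion lets each modality have its own encoder before merging. Late fusion keeps the per-modality models fully independent until the final score.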

For example, in image captioning, a multimodal model analyzes both the visual content of an image and the context provided by accompanying text to generate a descriptive caption. In healthcare, multimodal models can combine medical images, patient records, and sensor data to improve diagnosis and treatment recommendations. Another practical application is in self-driving cars, where models process camera images, radar data, and GPS information to navigate safely.

The benefits of multimodal models include improved accuracy, robustness, and flexibility. When one modality is noisy or missing, they can fall back on the others, which makes them more resilient in real-world scenarios. Applications span many industries, including:

- customer service chatbots that understand both text and voice
- robots that interpret visual and auditory cues
- entertainment tools that generate content blending text, images, and audio
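One simple way to get robustness to missing data is late fusion that skips absent modalities and renormalizes the remaining weights. The sketch below makes three assumptions:

- Each modality's model has already produced a score.
- `None` marks a missing input.
- The function name `fuse_available` and the weight values are hypothetical.

Production systems often rely on learned approaches instead, such as training with randomly dropped modalities. Treat this as an illustration of the idea rather than a standard method.

```python
# Sketch: late fusion that tolerates missing modalities.
# Assumption: None marks a modality that is unavailable for this example
# (e.g. a dropped sensor reading); the weights are hypothetical.
from typing import Dict, Optional


def fuse_available(scores: Dict[str, Optional[float]],
                   weights: Dict[str, float]) -> float:
    """Weighted average over present modalities, with weights renormalized."""
    present = {m: s for m, s in scores.items() if s is not None}
    if not present:
        raise ValueError("no modality available to fuse")
    total_weight = sum(weights[m] for m in present)
    return sum(weights[m] * s for m, s in present.items()) / total_weight


if __name__ == "__main__":
    weights = {"text": 0.5, "audio": 0.25, "image": 0.25}
    # All three modalities present.
    print(fuse_available({"text": 0.75, "audio": 0.5, "image": 0.25}, weights))  # 0.5625
    # Audio missing: text and image weights are rescaled to sum to 1.
    print(fuse_available({"text": 0.75, "audio": None, "image": 0.0}, weights))  # 0.5
```

Renormalizing keeps the output on the same scale whether or not every modality is present. Without it, a missing input would silently pull the fused score toward zero.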

However, developing multimodal models comes with challenges. Integrating different data types requires sophisticated fusion techniques and large, diverse datasets. Getting a model to learn the relationships between modalities is difficult, and training and running such models can demand substantial computational resources. Aligning and synchronizing data from different sources, such as matching an audio track to the corresponding video frames, adds another layer of difficulty.
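Here is a small worked example of the audio/video alignment problem. Suppose audio is sampled at a fixed rate and video runs at a constant frame rate, with both streams starting at the same instant (an assumption). Each frame then covers `sample_rate / fps` audio samples. At 16 kHz and 25 fps that is exactly 640 samples. At 48 kHz and the NTSC rate of 30000/1001 fps it is a non-integer 1601.6 samples. The sketch below uses exact fractions so that every sample maps to exactly one frame. The function names are hypothetical, and real pipelines must also handle container timestamps, variable frame rates, and clock drift.

```python
# Sketch: mapping between audio sample indices and video frame indices.
# Assumptions: constant frame rate, fixed sample rate, and both streams
# starting at t = 0 with no offset or drift.
import math
from fractions import Fraction
from typing import Tuple


def samples_per_frame(sample_rate_hz: int, fps: Fraction) -> Fraction:
    """Exact number of audio samples spanned by one video frame."""
    return Fraction(sample_rate_hz) / fps


def frame_for_sample(sample_index: int, sample_rate_hz: int,
                     fps: Fraction) -> int:
    """Index of the video frame on screen when this audio sample plays."""
    return math.floor(sample_index / samples_per_frame(sample_rate_hz, fps))


def samples_for_frame(frame_index: int, sample_rate_hz: int,
                      fps: Fraction) -> Tuple[int, int]:
    """Half-open [start, end) range of samples that belong to a frame.

    Using ceil on both ends makes this the exact inverse of
    frame_for_sample, so no sample is dropped or counted twice.
    """
    spf = samples_per_frame(sample_rate_hz, fps)
    return math.ceil(frame_index * spf), math.ceil((frame_index + 1) * spf)


if __name__ == "__main__":
    fps_25 = Fraction(25)
    fps_ntsc = Fraction(30000, 1001)  # about 29.97 fps
    print(samples_for_frame(10, 16000, fps_25))   # (6400, 7040)
    print(frame_for_sample(7000, 16000, fps_25))  # 10
    print(samples_for_frame(0, 48000, fps_ntsc))  # (0, 1602)
    print(samples_for_frame(2, 48000, fps_ntsc))  # (3204, 4805)
```

In the NTSC case the frames cover 1602, 1602, and then 1601 samples. This uneven pattern is why naive integer arithmetic (such as always using 1601 or 1602 samples per frame) slowly drifts out of sync.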

Current State and Trends

Multimodal models are rapidly gaining adoption across many sectors. Major technology companies such as Google, IBM, and Microsoft are investing heavily in multimodal AI. Their offerings include Google’s PaLM family (including the multimodal PaLM-E) and its successor Gemini, IBM’s Watson, and Microsoft’s Azure AI. OpenAI’s GPT-4o was trained end to end to accept text, audio, and image inputs and to produce text, audio, and image outputs, illustrating the trend toward more versatile AI systems.

Recent developments include the rise of large multimodal models (LMMs) that can handle a wide range of tasks and modalities. These models are being fine-tuned for specific applications, such as robotic control and advanced content generation. The integration of multimodal models with cloud platforms is also making them more accessible to businesses and developers.

Future Outlook

The future of multimodal models looks promising, with ongoing research focused on improving fusion techniques, reducing computational costs, and expanding the range of supported modalities. As these models become more sophisticated, they are expected to play a crucial role in creating AI systems that can interact with the world in more human-like ways, leading to breakthroughs in areas like personalized medicine, autonomous systems, and immersive virtual experiences.

Conclusion

Multimodal models represent a significant advancement in artificial intelligence, enabling systems to process and integrate diverse data types for more accurate and robust outcomes. As technology continues to evolve, these models will become increasingly integral to solving complex real-world problems and driving innovation across industries.
