Explainable AI

(2025-08-17T01:40.215Z)

Perplexity Query

Question:

Write a comprehensive one-page article about "Explainable AI".

Response from sonar-pro:

Unlocking Trust and Transparency in Machine Learning

Introduction

Explainable AI (XAI) refers to artificial intelligence systems designed to make their decisions and underlying logic transparent, interpretable, and understandable to human users.

[eqf73w]

[ve56cc]

[wxku2f]

As AI increasingly permeates sectors like healthcare, finance, and transportation, the need for AI systems that can justify their outcomes has become critical for trust, safety, and ethical adoption.

[ve56cc]

[wxku2f]

This transparency is vital not only for building user confidence but also for meeting regulatory demands and ethical standards in decision-making.

Main Content

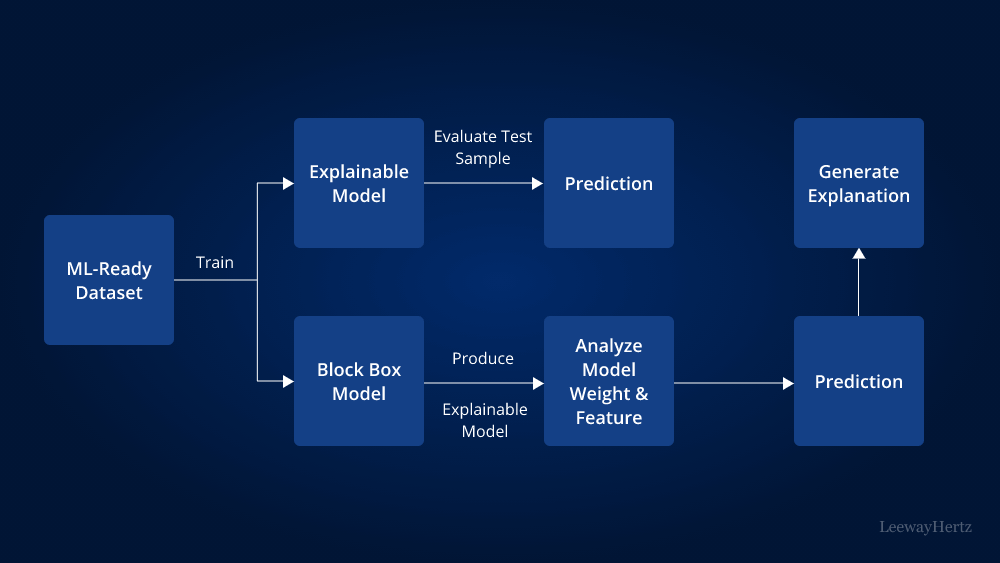

At its core, explainable AI is a paradigm that transforms how humans interact with complex machine learning models, moving beyond the traditional "black box" approach to one where decisions can be understood and scrutinized.

[eqf73w]

[ve56cc]

[3z6zqr]

In practice, XAI methods explain how inputs lead to outputs by highlighting which factors influenced a prediction or recommendation. Black-box systems, by contrast, often cannot justify their decisions even to their creators. XAI acts as a translator between machine logic and human reasoning, so that people can check a model's conclusions and work with it rather than around it.

[eqf73w]

Practical examples of XAI abound. In healthcare, explainable AI models assist doctors by highlighting which elements of a medical scan led to an automated diagnosis.

[3z6zqr]

[qo6zlo]

For instance, a deep learning algorithm designed for cancer screening can not only predict risk levels but also generate heat maps or written rationales showing which anomalies led to these conclusions.

[3z6zqr]

[qo6zlo]
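One simple way to produce such a heat map is occlusion sensitivity: blank out one region of the input at a time and record how much the model's score drops. The sketch below is illustrative only. The 3x3 `scan`, the `toy_risk_score` function, and its weights are hypothetical stand-ins for a real image and a trained screening model, and real systems typically occlude patches rather than single pixels.

```python
def occlusion_heatmap(image, score_fn, baseline=0.0):
    """Return a grid where each cell holds the score drop caused by
    replacing that pixel with `baseline`."""
    base_score = score_fn(image)
    heatmap = []
    for r, row in enumerate(image):
        heat_row = []
        for c in range(len(row)):
            occluded = [list(line) for line in image]  # copy so the input is untouched
            occluded[r][c] = baseline
            heat_row.append(base_score - score_fn(occluded))
        heatmap.append(heat_row)
    return heatmap


def toy_risk_score(image):
    # Hypothetical stand-in for a trained classifier: a weighted sum that
    # mostly looks at the centre pixel.
    weights = [
        [0.0, 0.1, 0.0],
        [0.1, 0.6, 0.1],
        [0.0, 0.1, 0.0],
    ]
    return sum(w * p for w_row, p_row in zip(weights, image)
               for w, p in zip(w_row, p_row))


scan = [
    [0.2, 0.1, 0.0],
    [0.1, 0.9, 0.3],
    [0.0, 0.2, 0.1],
]
heatmap = occlusion_heatmap(scan, toy_risk_score)

# Locate the pixel whose removal lowers the score the most.
hottest = max(
    ((r, c) for r in range(3) for c in range(3)),
    key=lambda rc: heatmap[rc[0]][rc[1]],
)
print(hottest)
```

Cells with large values mark the regions the score depends on most, which is the information a heat map overlay conveys to a reviewer.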

Explanations of this kind let clinicians vet predictions, share transparent information with patients, and identify errors or biases. In finance, XAI helps banks comply with regulations by explaining why a loan application was approved or denied, which supports fair audits and lets customers challenge outcomes.

[3z6zqr]

[wxku2f]
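For a model that is a weighted sum of features, an explanation of a loan decision can be read directly off the per-feature contributions. The following is a minimal sketch under that assumption. The feature names, `WEIGHTS`, `BASELINE`, and `THRESHOLD` are hypothetical and do not describe any real lender's scoring model.

```python
# Hypothetical linear scoring model; all numbers are illustrative.
WEIGHTS = {"income": 0.4, "debt_ratio": -0.5, "late_payments": -0.3, "credit_age": 0.2}
BASELINE = {"income": 0.5, "debt_ratio": 0.3, "late_payments": 0.1, "credit_age": 0.5}
THRESHOLD = 0.0


def explain_decision(applicant):
    """Score an applicant and rank features by how strongly they pushed
    the score toward denial (most negative contribution first)."""
    contributions = {
        name: WEIGHTS[name] * (applicant[name] - BASELINE[name])
        for name in WEIGHTS
    }
    score = sum(contributions.values())
    decision = "approved" if score >= THRESHOLD else "denied"
    reasons = sorted(contributions.items(), key=lambda item: item[1])
    return decision, score, reasons


applicant = {"income": 0.4, "debt_ratio": 0.7, "late_payments": 0.5, "credit_age": 0.6}
decision, score, reasons = explain_decision(applicant)

print(decision)
for name, contribution in reasons:
    print(f"{name}: {contribution:+.2f}")
```

The ranked list gives an auditor or customer the main factors behind the outcome. Models that are not linear need an attribution method to produce comparable numbers.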

Explainable AI provides significant benefits:

- Enhanced trust and adoption: Transparent reasoning enables stakeholders and end-users to confidently accept AI-driven recommendations. [wxku2f]

- Improved decision quality: Understanding model outputs allows organizations to validate results and make better-informed choices. [wxku2f]

- Bias detection and mitigation: XAI tools can help uncover hidden biases in data or model logic, facilitating fair and ethical use. [wxku2f]

- Accountability and compliance: Regulatory bodies increasingly demand transparency in AI; XAI helps organizations document and prove the fairness of automated decisions. [wxku2f]

- Stakeholder engagement: By making decisions interpretable to both technical and non-technical audiences, XAI fosters broader involvement in critical processes. [wxku2f]
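As an illustration of the bias detection point above, one basic check compares approval rates across groups, a measure often called the demographic parity gap. The sketch below assumes decisions are available as (group, outcome) pairs. The group labels and data are made up, and this is only one of several fairness metrics, not a full audit.

```python
from collections import defaultdict


def approval_rates(records):
    """Map each group to the fraction of its records with outcome 1."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, outcome in records:
        totals[group] += 1
        approved[group] += int(outcome)
    return {group: approved[group] / totals[group] for group in totals}


def demographic_parity_gap(records):
    """Difference between the highest and lowest group approval rate."""
    rates = approval_rates(records)
    return max(rates.values()) - min(rates.values())


# Hypothetical model decisions: (group label, 1 = approved / 0 = denied).
decisions = [
    ("A", 1), ("A", 1), ("A", 0), ("A", 1),
    ("B", 1), ("B", 0), ("B", 0), ("B", 0),
]

rates = approval_rates(decisions)
gap = demographic_parity_gap(decisions)
print(rates, gap)
```

A large gap does not prove unfair treatment on its own, but it flags where feature-level explanations should be examined more closely.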

Despite these advantages, XAI faces challenges. Achieving high levels of accuracy and performance often involves highly complex models that resist simple explanation.

[ve56cc]

There is no unified standard for what constitutes a sufficiently "good" explanation, and explanations must be tailored to different audiences, from regulators to consumers.

[qo6zlo]

Balancing interpretability with predictive power and user privacy remains an ongoing technical and philosophical debate in the AI community.

[qo6zlo]

[wxku2f]

Current State and Trends

Adoption of explainable AI is accelerating across industries that require transparency for legal, ethical, and business reasons, with healthcare, finance, insurance, and law at the forefront.

[eqf73w]

[3z6zqr]

[wxku2f]

Key players include IBM, Google, and Microsoft, all of whom are investing in XAI toolkits and platforms to address regulatory and stakeholder demands.

[ve56cc]

[wxku2f]

Recent technological advances have produced methods such as feature attribution visualizations, model-agnostic explanation frameworks (e.g., LIME and SHAP), and integrated explanation dashboards for real-time analysis.

[ve56cc]
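SHAP builds on Shapley values from cooperative game theory: a feature's attribution is its average marginal contribution over all orders in which features could be revealed to the model. The sketch below computes these values exactly by enumerating every ordering, which is only feasible for a handful of features. It is not the API of the SHAP library, which relies on approximations. The `toy_model`, `instance`, and `baseline` are hypothetical, and "missing" features are simulated by substituting baseline values, which is one common simplification.

```python
from itertools import permutations


def shapley_values(predict, instance, baseline):
    """Exact Shapley attributions for `instance` relative to `baseline`.

    Features not yet revealed take their baseline value. Cost grows as n!,
    so this is for illustration only.
    """
    n = len(instance)
    phi = [0.0] * n
    orderings = list(permutations(range(n)))
    for order in orderings:
        current = list(baseline)
        previous = predict(current)
        for i in order:
            current[i] = instance[i]       # reveal feature i
            updated = predict(current)
            phi[i] += updated - previous   # marginal contribution of feature i
            previous = updated
    return [total / len(orderings) for total in phi]


def toy_model(x):
    # Hypothetical model with an interaction between features 0 and 1.
    return 2.0 * x[0] + 1.0 * x[1] + 3.0 * x[0] * x[1] - 0.5 * x[2]


instance = [1.0, 1.0, 1.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(toy_model, instance, baseline)
print(phi)
```

The attributions sum to the difference between the model's output on the instance and on the baseline, and the interaction term is split evenly between the two features involved.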

As of mid-2025, market trends point to rapid growth in XAI adoption, driven by stricter AI regulations, such as the EU AI Act and similar policies worldwide, that require transparency in high-risk applications. According to the cited sources, organizations using XAI report gains in model accuracy, stakeholder trust, and operating profit, which they attribute to improved error detection and user engagement.

[qo6zlo]

[wxku2f]

Future Outlook

Looking ahead, explainable AI is poised to become an industry standard rather than a differentiator. Advances in hybrid decision-making systems, in which humans and machines work together on a decision, will depend on more sophisticated and intuitive explanation techniques.

[eqf73w]

As societal reliance on AI increases, XAI will be essential in bridging human and machine cognition, catalyzing ethical innovation, responsible deployment, and robust public trust.

Conclusion

Explainable AI fundamentally transforms how people interact with and trust artificial intelligence by making machine decisions transparent and interpretable. As technology advances and regulatory scrutiny intensifies, successful AI deployment will hinge on explainability, promising a future of trustworthy, ethical, and collaborative intelligent systems.

Citations

[eqf73w] 2025, Aug 06. What Is Explainable AI (XAI)? - Palo Alto Networks. Published: 2020-01-01 | Updated: 2025-08-06

[ve56cc] 2025, Jul 30. What is Explainable AI (XAI)? - IBM. Published: 2023-03-29 | Updated: 2025-07-30

[3z6zqr] 2025, Aug 06. What is Explainable AI? - Software Engineering Institute. Published: 2022-01-17 | Updated: 2025-08-06

[qo6zlo] 2025, Feb 02. The Fundamentals of Explainable AI and its Importance. Published: 2022-08-17 | Updated: 2025-02-02

[wxku2f] 2025, Jul 17. Benefits Of Explainable AI - Meegle. Published: 2025-07-08 | Updated: 2025-07-17