Data Quality Management

(2025-08-11T20:09.641Z)

Perplexity Query

(2025-08-11T20:09.641Z)

Question:

Write a comprehensive one-page article about "Data Quality Management".

Structure the article as follows:

- Introduction (2-3 sentences)

- Define the term and its significance

- Provide context for why it matters

- Main Content (3-4 paragraphs)

- Explain the concept in detail

- Include practical examples and use cases

- Discuss benefits and potential applications

- Address any challenges or considerations

- Current State and Trends (1-2 paragraphs)

- Discuss current adoption and market status

- Mention key players or technologies

- Highlight recent developments

- Future Outlook (1 paragraph)

- Predict future developments

- Discuss potential impact

- Conclusion (1-2 sentences)

- Summarize key points

- End with a forward-looking statement

Important Guidelines:

- Keep the total length to approximately one page (500-800 words)

- Use clear, accessible language

- Include specific examples and real-world applications

- Make it engaging and informative for a general audience

- Use markdown formatting for structure

Image References:

Include [image] after the introduction.

Include [image] after the main content section.

Include [image] before the conclusion.

Replace "Data Quality Management" with the actual vocabulary term in the prompt.

Model:

sonar-pro

Response from sonar-pro:

Data Quality Management is the discipline of ensuring that organizational data is accurate, consistent, complete, timely, and fit for its intended use, through coordinated processes, people, and technologies[1][5]. It matters because decisions, automation, and analytics are only as reliable as the underlying data; poor quality leads to wasted spend, missed opportunities, compliance issues, and eroded trust[1][3].

Introduction

- Data Quality Management (DQM) provides a systematic framework to profile, validate, cleanse, and monitor data so it remains dependable throughout its lifecycle[2][5].

- In a world of real-time analytics and AI-driven operations, DQM underpins everything from customer personalization to regulatory reporting, reducing risk and improving outcomes[2][3].

Main Content

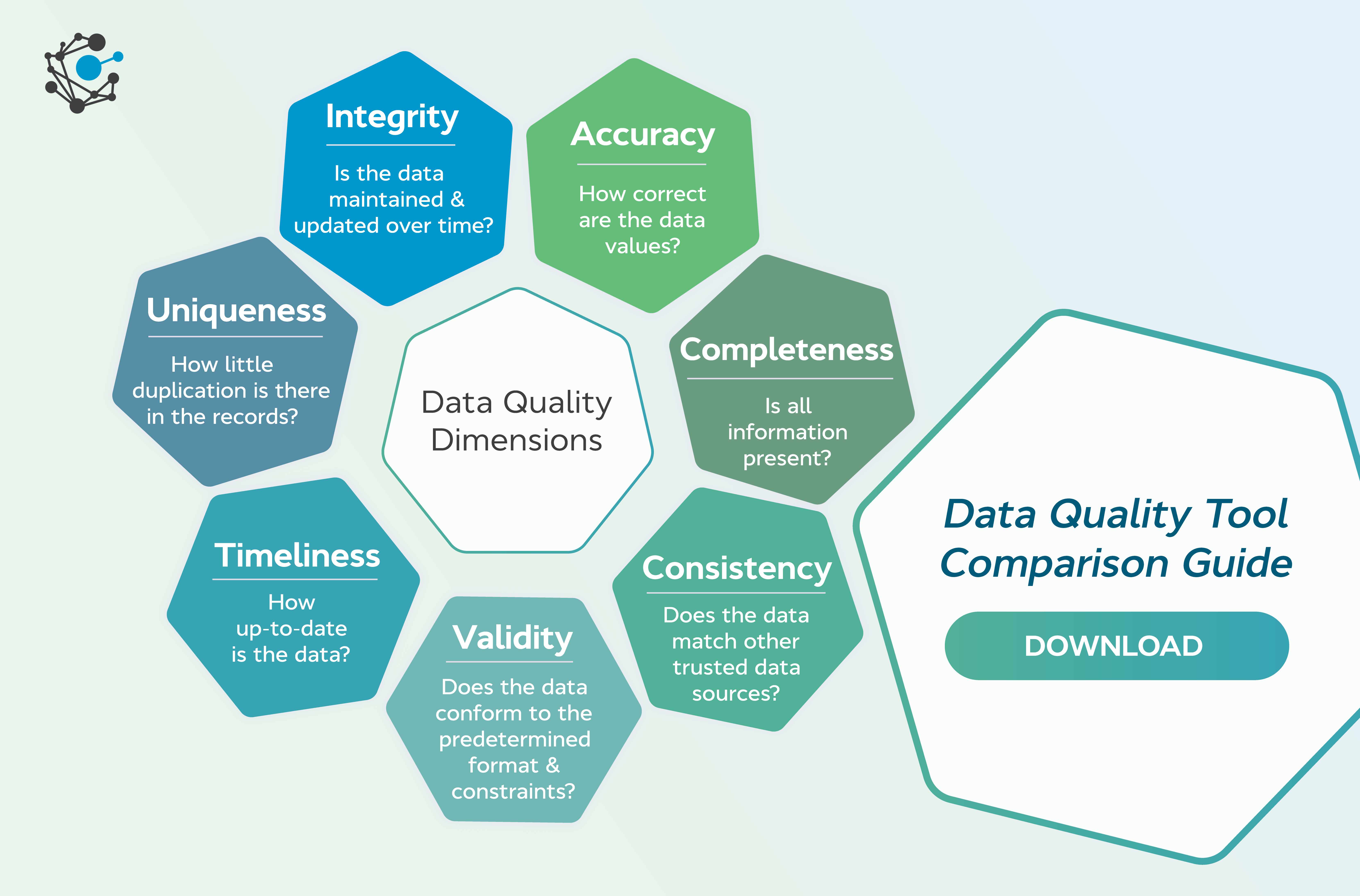

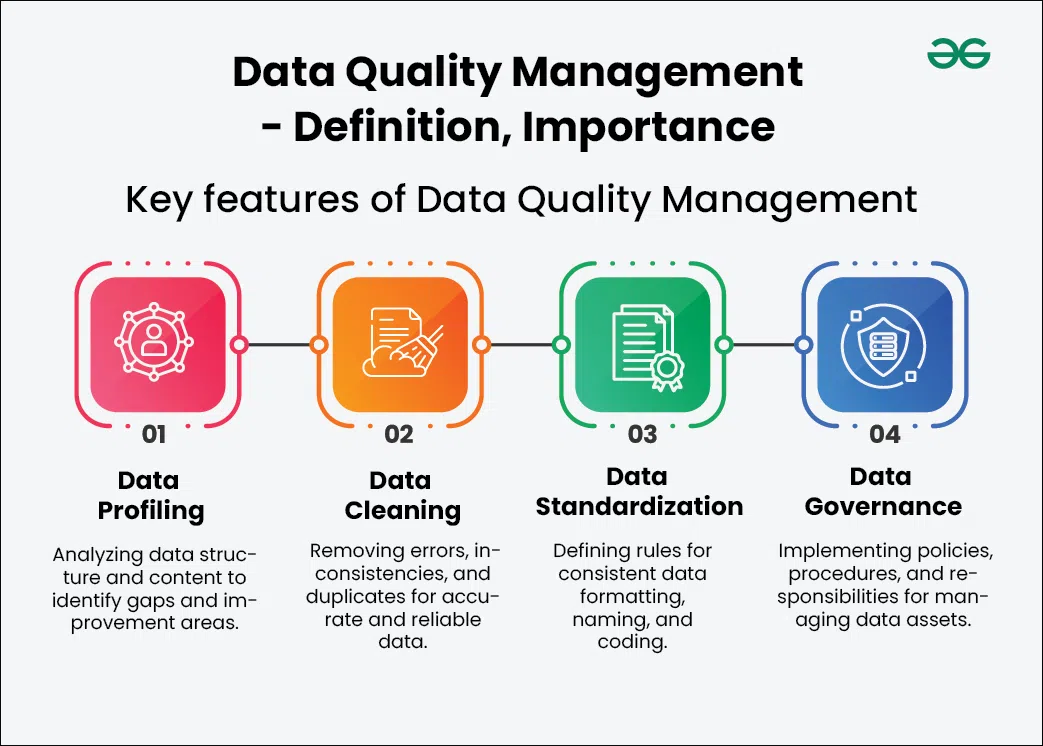

- At its core, DQM operationalizes key quality dimensions—such as accuracy, completeness, consistency, validity, and timeliness—via repeatable processes and controls[1][3]. Typical activities include data profiling (to understand content and spot anomalies), cleansing (to fix duplicates, standardize formats, and correct errors), validation (to enforce business rules), and continuous monitoring (to detect and prevent regressions)[2][3]. Effective programs align metrics and rules with business objectives, recognizing that acceptable thresholds vary by domain and use case[2][5].

- Practical examples span industries. In retail, deduplication and standardization of customer records improve churn modeling and campaign ROI; ongoing monitoring catches schema or feed changes before they corrupt dashboards[2][3]. In healthcare, validating patient identifiers and medication codes improves care coordination and reduces claims denials[3]. In finance, timeliness and accuracy controls on transaction data support fraud detection and regulatory reporting; automated anomaly detection flags outliers in near real time[2][3]. Even back-office functions benefit: cleansing supplier master data reduces payment errors and strengthens procurement analytics[3][5].

- The benefits are manifold: better decision-making from trustworthy analytics, higher operational efficiency through less data-related rework, improved customer experiences via consistent, personalized interactions, and stronger compliance through auditable controls[1][3]. Organizations also unlock advanced use cases, such as predictive maintenance or AI-driven recommendations, because high-quality training and inference data boosts model performance and reliability[2][3].

- Challenges include fragmented data sources, evolving schemas, unclear ownership, and changing business rules. Many companies struggle to define common standards across departments and to embed stewardship responsibilities in day-to-day workflows[3][5]. Traditional, batch-only checks can bottleneck real-time pipelines; modern approaches add proactive monitoring, automated anomaly detection, and self-updating validation rules to keep pace with streaming and microservices architectures[2].
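The profiling, cleansing, and validation activities described above can be illustrated with a minimal Python sketch. The records, field names, and the "email must contain @" rule are hypothetical assumptions chosen for illustration; a real program would draw its rules from business requirements and would likely use a dedicated tool.

```python
from collections import Counter

# Hypothetical customer records; field names are illustrative assumptions.
records = [
    {"id": 1, "email": "Ana@Example.com ", "country": "us"},
    {"id": 2, "email": "ana@example.com", "country": "US"},
    {"id": 3, "email": None, "country": "DE"},
    {"id": 4, "email": "bob[at]example.com", "country": "de"},
]


def profile(rows):
    """Profiling: count missing (None) values per field."""
    nulls = Counter()
    for row in rows:
        for field, value in row.items():
            if value is None:
                nulls[field] += 1
    return dict(nulls)


def cleanse(rows):
    """Cleansing: standardize formats, then drop records with a repeated email."""
    seen, cleaned = set(), []
    for row in rows:
        email = row["email"].strip().lower() if row["email"] else None
        country = row["country"].upper()
        if email is not None and email in seen:
            continue  # duplicate of an earlier record
        if email is not None:
            seen.add(email)
        cleaned.append({"id": row["id"], "email": email, "country": country})
    return cleaned


def validate(rows):
    """Validation: return ids that break a simple rule (email must contain '@')."""
    return [row["id"] for row in rows if not row["email"] or "@" not in row["email"]]


null_counts = profile(records)
cleaned = cleanse(records)
invalid_ids = validate(cleaned)
print(null_counts, [r["id"] for r in cleaned], invalid_ids)
```

Running the three steps in this order means duplicates are detected on standardized values, so records that differ only in letter case or stray whitespace are treated as the same customer.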

Current State and Trends

- Adoption has broadened beyond IT to data product teams, risk, marketing, and operations, driven by analytics, AI initiatives, and regulatory pressure. Contemporary DQM emphasizes lifecycle coverage—from ingestion to consumption—and treats data quality as a continuous capability with SLAs and KPIs, not a one-off project[2][3]. Many organizations are formalizing quality metrics (e.g., match rates, null percentages, freshness) and embedding them in governance dashboards[3][5].

- Key technologies include data profiling and cleansing tools, validation rules engines, observability platforms, and ML-enhanced anomaly detection for streaming and batch data[2][5]. Notable approaches include automated quality scoring, pattern recognition to spot subtle inconsistencies, and AI-assisted rule generation that adapts as business requirements evolve[2]. Vendors and open-source tools increasingly integrate DQM into ingestion, ELT, and orchestration layers to reduce latency between issue detection and remediation[2][5].

- Recent developments feature proactive monitoring of data contracts, real-time alerts on schema drift, and predictive quality metrics that anticipate trouble before it hits downstream dashboards and models[2]. This shift helps teams maintain reliability in complex, distributed data stacks where manual checks are insufficient[2][3].
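As a rough sketch of the metrics and monitoring ideas above, the following Python computes a null percentage, a freshness check, and a schema-drift report against a data contract. The contract format, column names, and thresholds are assumptions made for this example and do not reflect any particular vendor's API.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical data contract: expected columns and their Python types.
CONTRACT = {"order_id": int, "amount": float, "updated_at": datetime}


def schema_drift(row, contract=CONTRACT):
    """Return columns that are missing, unexpected, or of the wrong type."""
    missing = sorted(set(contract) - set(row))
    unexpected = sorted(set(row) - set(contract))
    wrong_type = sorted(
        col for col in set(contract) & set(row)
        if row[col] is not None and not isinstance(row[col], contract[col])
    )
    return {"missing": missing, "unexpected": unexpected, "wrong_type": wrong_type}


def null_percentage(rows, column):
    """Share of rows (0 to 100) where the column is absent or None."""
    if not rows:
        return 0.0
    nulls = sum(1 for r in rows if r.get(column) is None)
    return 100.0 * nulls / len(rows)


def is_fresh(rows, column, max_age, now):
    """True if the newest timestamp in the column is within max_age of now."""
    stamps = [r[column] for r in rows if r.get(column) is not None]
    return bool(stamps) and now - max(stamps) <= max_age


# Fixed reference time so the example is reproducible.
now = datetime(2025, 1, 1, 12, 0, tzinfo=timezone.utc)
rows = [
    {"order_id": 1, "amount": 9.5, "updated_at": now - timedelta(minutes=5)},
    {"order_id": 2, "amount": None, "updated_at": now - timedelta(hours=3)},
]
drifted = {"order_id": "3", "amount": 4.0, "currency": "EUR"}

report = schema_drift(drifted)
print(report)
print(null_percentage(rows, "amount"), is_fresh(rows, "updated_at", timedelta(hours=1), now))
```

In practice, results like these would be emitted on a schedule and surfaced in a dashboard or alerting channel rather than printed.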

Future Outlook

- Expect DQM to become productized and “shift-left”: quality checks will be codified alongside data transformations, with policy-as-code and automated enforcement in CI/CD for data[2][5]. AI will further improve automated rule discovery, entity resolution, and outlier triage, while data contracts and observability will make quality visible and measurable to stakeholders. As more enterprises operationalize AI, DQM will be essential infrastructure, directly impacting model accuracy, regulatory compliance, and customer trust[2][3].
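One way to picture "policy-as-code" is to declare quality thresholds as plain data that lives in version control and is evaluated automatically. The sketch below is only an illustration under that assumption; the rule names, check types, and limits are hypothetical, not a standard format.

```python
# Hypothetical policy: thresholds declared as data so they can be versioned
# and reviewed alongside transformation code.
POLICY = [
    {"name": "amount_not_null", "column": "amount", "check": "max_null_pct", "limit": 10.0},
    {"name": "amount_non_negative", "column": "amount", "check": "min_value", "limit": 0.0},
]


def evaluate(rows, policy=POLICY):
    """Return the names of policy rules that the given rows violate."""
    failures = []
    for rule in policy:
        values = [r.get(rule["column"]) for r in rows]
        present = [v for v in values if v is not None]
        if rule["check"] == "max_null_pct":
            null_pct = 100.0 * (len(values) - len(present)) / len(values) if values else 0.0
            ok = null_pct <= rule["limit"]
        elif rule["check"] == "min_value":
            ok = all(v >= rule["limit"] for v in present)
        else:
            raise ValueError(f"unknown check: {rule['check']}")
        if not ok:
            failures.append(rule["name"])
    return failures


good = [{"amount": 5.0}, {"amount": 0.0}]
bad = [{"amount": -1.0}, {"amount": None}]
print(evaluate(good), evaluate(bad))
```

A CI job could call `evaluate` on a sample of freshly transformed data and fail the build when the returned list is non-empty, which is the automated enforcement the paragraph above anticipates.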

Conclusion

- Strong Data Quality Management aligns people, processes, and technology to deliver reliable data for decisions, operations, and AI at scale[1][2][3].

- Organizations that embed continuous, automated DQM will move faster with fewer risks, turning data into a sustained competitive advantage[2][5].

Citations

[1]: 2025, Jul 27. Data Quality Management - Definition, Importance - GeeksforGeeks. Published: 2025-07-23 | Updated: 2025-07-28

[2]: 2025, Jul 28. What Is Data Quality Management: Framework & Best Practices. Published: 2025-07-28 | Updated: 2025-07-29

[3]: 2025, Jul 23. What is Data Quality and Why is it Important? - GeeksforGeeks. Published: 2025-07-23 | Updated: 2025-07-24

[4]: 2025, Aug 10. What is Data Quality Management? Why is it important?. Published: 2025-07-17 | Updated: 2025-08-11

[5]: 2024, Sep 26. Data Quality Management What, Why, How - Best Practices. Published: 2024-08-21 | Updated: 2024-09-27