Context Window

Related Content

(2025-08-16T23:33.785Z)

Perplexity Query

(2025-08-16T23:33

.785Z)

Question:

Write a comprehensive one-page article about "Context Window".

Response from sonar-pro:

The Memory Frame of Modern AI

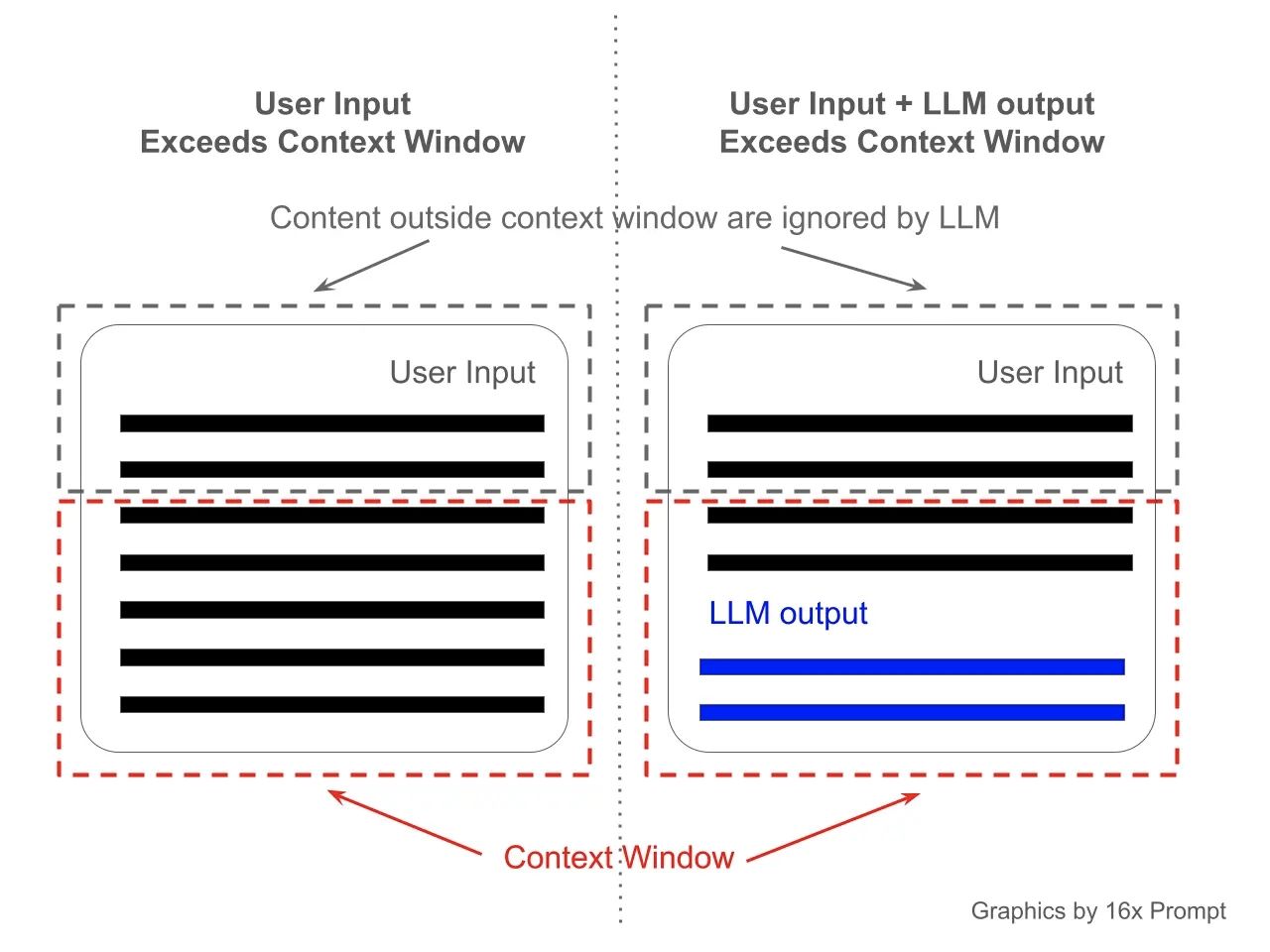

A context window refers to the amount of information—measured as words, tokens, or characters—that an AI Model, such as a large language model (LLM), can consider while generating a response or making a prediction.

[l58ck7]

[9klejp]

[f01kqt]

The significance of the context window lies in how it defines the machine’s “working memory”: it determines how much input the AI can store, recall, and use at any one time, directly impacting the accuracy, relevance, and usefulness of its outputs.

[sser6w]

[9klejp]

As AI models become integrated into applications ranging from virtual assistants to document analysis, the context window is critical for ensuring these systems process information in a way that mirrors human reasoning and conversation.

[sser6w]

The Fundamentals of Context Window

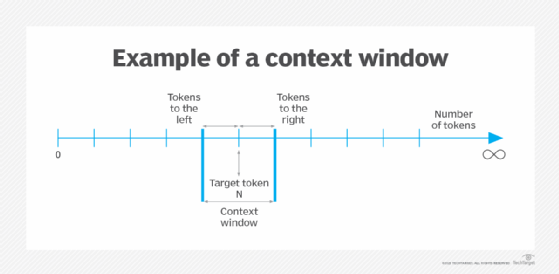

At its core, the context window is a fixed-size frame surrounding a target token or word in a sequence.

[8jrlzq]

In the realm of natural language processing (NLP), this sets the boundaries for how much previous and subsequent information the model considers when generating a response. For example, in the sentence “The cat sat on the mat,” if the model analyzes the word “sat” with a window size of 2, it reviews the two preceding and two following words (“The,” “cat,” “on,” “the”). This range helps the model discern the meaning of “sat” based on immediate context.

[8jrlzq]

In practical terms, context windows are vital for tasks like summarizing lengthy documents, answering multi-step questions, or sustaining real-time conversations. For example, if you ask an AI model to read several paragraphs and explain the main ideas, the context window determines how many of those paragraphs can be considered before information is lost or forgotten.

[sser6w]

In platforms like ChatGPT, Gemini, or Claude, the model’s context window powerfully influences whether it can process complex documents, intricate instructions, or multi-part dialogs without losing track.

[9klejp]

Applications and Benefits

- Text Summarization: Larger context windows enable models to summarize entire articles or multipart conversations accurately. [sser6w]

- Conversational AI: Chatbots and virtual assistants benefit from extended memory, making interactions seem more coherent and “human-like”. [9klejp]

- Legal and Technical Analysis: Enterprises use LLMs with large context windows to parse contracts, manuals, or data sheets, extracting relevant information and providing structured answers. [9klejp]

- AI Powered Content Generation: Writing tools leverage wide context windows to maintain style and substance across longer pieces of text.

Increasing the context window size results in several benefits:

- Enhanced ability to process nuanced, multi-topic prompts

- Improved accuracy and coherence in responses

However, challenges arise with scaling context windows. As window sizes grow, computational complexity and resource requirements can increase substantially, making it difficult to maintain efficiency and cost-effectiveness. In individual working sessions with an AI Model, Context Rot is common. Architectural advances—such as those underlying new transformer-based models—are helping to overcome this, though there are trade-offs.

[f01kqt]

Current State and Industry Trends

The size of context windows has grown dramatically in recent years. Early LLMs like

- GPT-3 managed about 2,000 tokens (approximately 1,500 words), sufficient for basic dialog but restrictive for enterprise use. [9klejp]

- Today, leading models boast context windows ranging from 100,000 tokens (Anthropic’s Claude) to two million tokens (Google’s Gemini). [9klejp] This allows processing of hundreds or even thousands of pages at a time.

Key industry players driving context window innovation include OpenAI (ChatGPT), Google (Gemini), and Anthropic (Claude), as well as specialist firms like Appen which offer support for context window optimization and benchmarking.

[f01kqt]

Recent breakthroughs in model architectures have made it possible to increase window sizes without proportional increases in memory and processing costs, signaling continued progress in this domain.

[f01kqt]

The Future of Context Windows

Looking ahead, context windows will likely grow even larger, enabling AI models to work with entire books, datasets, or continuous conversations while maintaining contextual awareness. This will open doors to new kinds of applications—from educational tutoring and scientific research to collaborative writing and enterprise document management. The impact will be a new generation of AI systems that “remember” better, analyze deeper, and interact more naturally, further bridging the gap between human and machine intelligence.

In summary, the context window shapes the effectiveness, scope, and potential of modern AI models. As technology evolves, ever-expanding context windows promise richer, more meaningful interactions and deeper insight—bringing machines closer to truly understanding human language and knowledge.

Citations

[l58ck7] 2025, Jun 16. What is a context window? - TechTarget. Published: 2023-10-10 | Updated: 2025-06-16

[sser6w] 2025, May 15. What Is an AI Context Window? - Coursera. Published: 2025-07-02 | Updated: 2025-05-15

[8jrlzq] 2025, Jun 16. Context Window: The Essential Guide | Nightfall AI Security 101. Published: 2025-01-01 | Updated: 2025-06-16

[9klejp] 2025, Jun 16. What is a context window for Large Language Models? - McKinsey. Published: 2024-12-05 | Updated: 2025-06-16

[f01kqt] 2025, Jun 16. Understanding Large Language Models Context Windows - Appen. Published: 2024-04-11 | Updated: 2025-06-16